| 96 kHz.org |

| Advanced Audio Recording |

|

A Virtual Analog Synthesizer in VHDL

Physical modeling is currently a major subject in engineering. On the one hand, it can be used to simulate the mechanical or electronic behaviour of circuits in order to analyze such systems and to optimize the components attached to them. Electronic circuits such as PLCs, which are often used to control electromechanical systems, can be tested with the HIL (hardware in the loop) concept, since they run in real time (up to a given speed limit). Various test cases and the behaviour of the environment or the user are applied, and the response of the system is simulated.

Typically, HIL systems for real-time simulations and emulations are built with microprocessors or digital signal processors. Nowadays, however, FPGAs can also be used for such applications, since they perform basic calculations much faster and can process many "tasks" in parallel in real time. For example, a current DSP operating at 80 MHz needs more than 1 microsecond to evaluate a classical second-order differential equation describing a sine wave, because it requires more than 100 clock cycles for RAM access, wait states, summation, multiplication, and storage of the three basic parameters: position, velocity, and acceleration. For adequate real-time operation, a sampling frequency of about 100 kHz is used to correctly model a system with a bandwidth of 10-20 kHz, which is enough to simulate, for example, the behavior of a controller that controls this system. At 100 kHz the sample period is 10 microseconds, so at most about 10 such equations can be solved without exceeding the real-time limit.
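The cycle budget behind this estimate can be sketched in a few lines of Python. This is only an illustration, not the author's code: the function names are hypothetical, the figure of exactly 100 cycles per equation is an assumed round value taken from the "more than 100 clock cycles" above, and the semi-implicit Euler update is one common way to step such an oscillator, chosen here as an assumption.

```python
import math


def equations_per_sample(clock_hz, sample_rate_hz, cycles_per_equation):
    """How many equations fit into one sample period on a sequential processor."""
    cycles_per_sample = clock_hz // sample_rate_hz
    return cycles_per_sample // cycles_per_equation


def simulate_oscillator(freq_hz, sample_rate_hz, n_samples):
    """Step x'' = -w^2 * x, tracking position, velocity and acceleration.

    Uses a semi-implicit Euler update (an assumption, not taken from the article).
    """
    w = 2.0 * math.pi * freq_hz
    dt = 1.0 / sample_rate_hz
    pos, vel = 1.0, 0.0
    out = []
    for _ in range(n_samples):
        acc = -w * w * pos   # acceleration from the current position
        vel += acc * dt      # update velocity first
        pos += vel * dt      # then position, using the new velocity
        out.append(pos)
    return out


if __name__ == "__main__":
    # 80 MHz DSP, 100 kHz sample rate, assumed 100 cycles per equation
    print(equations_per_sample(80_000_000, 100_000, 100))
    wave = simulate_oscillator(1_000.0, 100_000, 1_000)
    print(min(wave), max(wave))
```

With these assumed numbers the DSP has 800 clock cycles per sample, so the count comes out slightly below the rounded figure of about 10 given above.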

This system was followed in 2004 by the freely programmable DSP system "Chameleon" from Soundart Hot, because it already comes with audio-compatible hardware such as ADCs, DACs, rotary controllers and a programmable display.

The Chameleon meets the requirements of sound creation, mixing and mastering studios very well. It has a 19-inch rack format and predefined structures for both real-time operation and low-level, ASM-based sound processing.

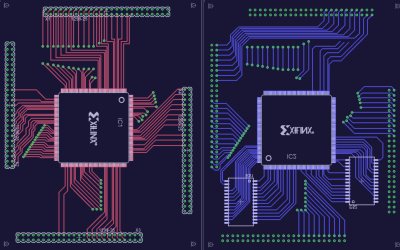

Virtual Analog Synthesizer in an FPGA

The DSP boards operate independently of each other and can be stacked in both directions (up/down and left/right) to create a versatile processor system with a true bus. It is also possible to chain the boards one after another for pipelined operation, because the interconnections are freely programmable in FPGAs.

The function of the virtual DSPs can also be changed according to individual needs: depending on the desired functions, various operation modes of the synthesis module can be achieved. Regarding the wave generator, classical sound synthesis like SST as well as VAM are possible. All submodules such as filters, oscillators and LFOs are interconnected via matrix operations, which offers very complex routing options. The delay can be used for chorus, flanging and stereo placement of the generated voice.

The currently used FPGA operates at 16 MHz, giving all the internal combinational logic enough time to operate correctly. Even at this low speed, the filters, which are of FIR type and process 128 taps sequentially, can produce an output sample rate of 125 kHz. At least 4-6 such units are available in one FPGA. Using 64-tap filters and targeting 48 kHz, it is possible to operate fully pipelined and generate at least 4 channels / voices including wave generation, filtering and the feedback mixing. Using IIR filters, which are commonly used in virtual analog synthesizers, it is possible to generate 12 voices at 48 kHz, leading to around 50 complex voices in total.

With the next generation of FPGAs, like Spartan 3, it should be possible to run the same architecture at doubled speed and use 3 times more space in the FPGA, so one module might serve 180 voices. A synthesis test with the Virtex 2 architecture for the largest available device already showed more than 160 voices for the same synthesizer topology and 120 for an assumed version with doubled complexity (6 filters, 8 LFOs per channel). Further gains can be achieved by optimizing the design for better pipelining.
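The throughput figures for the 16 MHz FPGA can be checked with a small Python sketch. It assumes that a sequential FIR unit computes exactly one tap (one multiply-accumulate) per clock cycle, which is a simplification; the function names are hypothetical and not part of the described design.

```python
def sequential_fir_rate(clock_hz, taps):
    """Output sample rate of one FIR unit that processes one tap per clock."""
    return clock_hz / taps


def filter_slots_per_sample(clock_hz, sample_rate_hz, taps):
    """How many complete filter runs fit into one sample period."""
    cycles_per_sample = clock_hz // sample_rate_hz
    return cycles_per_sample // taps


def fir_sequential(history, coeffs):
    """Compute one FIR output sample tap by tap.

    Returns the output value and the number of cycles used,
    counting one multiply-accumulate per cycle.
    """
    acc = 0.0
    cycles = 0
    for sample, coeff in zip(history, coeffs):
        acc += sample * coeff
        cycles += 1
    return acc, cycles


if __name__ == "__main__":
    print(sequential_fir_rate(16_000_000, 128))            # 128 taps at 16 MHz
    print(filter_slots_per_sample(16_000_000, 48_000, 64))  # 64 taps at 48 kHz
```

Under this assumption, 128 taps at 16 MHz give exactly 125 kHz, and at 48 kHz there is room for five 64-tap filter runs per sample period, which is consistent with "at least 4" channels once wave generation and feedback mixing are included.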

Conclusion and Summary

FPGAs typically run at lower clock speeds than DSPs when the synthesis constraints aim at a balanced tradeoff between speed and area, so that not too many additional flip-flops have to be added to reach the desired system frequency. Usually the clock is about 3 times lower. On the other hand, FPGAs process many operations within one single step where DSPs need 2 or more, and thus come closer to DSPs again in effective data processing speed. Even so, a ratio of about 1:2 may remain.

But there is room for improvement: because of fully pipelined operation, any remaining clock cycle within a sample period that is not needed to complete the operations of one channel can be used to generate more channels. Only a further set of variables / signals is required for this, so the pipeline delay has to be balanced against the architecture width. Tweaking the internal architecture so that complex operations like filtering are done in parallel saves pipeline delay and latency and increases the used area only moderately, whereas doubling the number of voices in a DSP system requires up to double the operating frequency.

For a synthesizer running at a system speed of 24 MHz, there is a theoretical pipeline budget of 500 clock cycles when targeting a 48 kHz data rate. If the calculation pipeline takes 400 cycles to complete, 100 voices are possible. Partially doubling the architecture rather than instantiating a second module might increase the used area by less than 100% but lower the delay to 300 cycles, leading to 200 possible voices. A DSP can still easily match this and also process 200 simple synthesizer voices like these. But increasing the DSP's system speed by 100% will lead to "only" 400 voices, while an FPGA at 48 MHz will already produce 700 voices. The higher the system speed, the greater the advantage of the FPGA.
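
The voice counts in this comparison follow from a simple cycle budget, sketched here in Python. The model assumes a fully pipelined design that accepts one new voice per clock cycle, so every cycle left over after the pipeline latency yields one more voice, while DSP voices are assumed to scale linearly with clock speed. The function names are hypothetical.

```python
def pipelined_voices(clock_hz, sample_rate_hz, pipeline_latency_cycles):
    """Voices of a fully pipelined FPGA design, one new voice per spare cycle."""
    budget = clock_hz // sample_rate_hz          # clock cycles per sample period
    return max(0, budget - pipeline_latency_cycles)


def dsp_voices(base_voices, base_clock_hz, new_clock_hz):
    """Voices of a sequential DSP, assumed to scale linearly with the clock."""
    return base_voices * new_clock_hz // base_clock_hz


if __name__ == "__main__":
    print(pipelined_voices(24_000_000, 48_000, 400))  # 24 MHz, 400-cycle pipeline
    print(pipelined_voices(24_000_000, 48_000, 300))  # 24 MHz, 300-cycle pipeline
    print(pipelined_voices(48_000_000, 48_000, 300))  # 48 MHz, 300-cycle pipeline
    print(dsp_voices(200, 1, 2))                      # DSP with doubled clock
```

The pipeline latency is a fixed cost, so doubling the FPGA clock adds a full extra sample budget of voices, whereas the DSP only doubles its count.
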
Taking into account that FPGA area is about 3 to 8 times more expensive than equivalent DSP power, it is only a question of time until FPGAs overtake DSPs, making it possible to integrate more demanding features easily while maintaining the number of voices. Assuming that more FPGA power becomes available in the future, thousands of voices will be possible, allowing many details of real acoustic instruments to be generated, such as a piano or a harp, which have many strings with harmonics as well as many details of resonance in the wood. See my idea for an FPGA based piano.

Apart from virtual analog modeling, FPGAs can also be used to emulate real analog synthesis. For oscillators, it is possible to make use of internal PLLs that are controlled by free-running oscillators created in the FPGA, which act physically (not virtually). One approach was shown here: Oscillation the analog way.

Read about the limits of VAM

Read an earlier article about VAM

|

| © 2004 J.S. |